Note: This guide contains example code with placeholder values for tokens, API keys, and credentials. These are illustrative examples only and do not contain real secrets.

Most teams aren't ready for what's coming.

Autonomous agents aren't just prototypes anymore. They're parsing docs, calling APIs, triaging support tickets—and doing it all while running 24/7 in prod. But while the AI layer is getting smarter, the plumbing around it is falling behind.

Ask around and you'll hear the same story: each team hand-rolls identity flows, secrets management, and policy enforcement. Every new agent spins up another snowflake.

It doesn't scale.

We've been here before. Web services hit the same wall a decade ago. The answer was a shared layer: HTTP, OAuth, JWTs, Envoy, Vault, OPA.

It's time to give agents the same treatment.

We can build a control plane that feels like HTTP for agents, with identity, policy, and secrets as first-class citizens. This guide assumes working knowledge of cloud-native concepts such as microservices, APIs, and containers. It also assumes familiarity with tools like Docker, Kubernetes, Auth0, OPA, and Vault.

Here's how.

## 1. The Vision

Right now, agent infrastructure is fragmented.

- Every team rolls their own onboarding logic

- Policies live in GitHub READMEs

- Secrets are long-lived and manually rotated

- Audit trails? If you're lucky.

That's a problem.

### What We Actually Need

We need a shared runtime model for agents, where:

- Identity is established via OAuth2 or SPIFFE

- Policy is enforced via sidecars and Rego bundles

- Secrets are short-lived and injected automatically

- Every action is observable and scoped by identity

If we get this right, developers can spin up new agents in minutes. Security gets auditability and control. Infra teams stop reinventing the wheel.

Just like HTTP gave us a common language for web services, this stack gives us a common language for agents.

### The Pain Points Today

Let's be brutally honest about where we are:

- Identity is an afterthought: Most AI agents today run with static API keys or service account credentials with little-to-no fine-grained access control. Rotating credentials is a manual process that often gets neglected.

- Isolation is poor: Agents often share environments, credentials, and execution contexts, making it nearly impossible to attribute actions to specific agents or contain blast radius during incidents.

- Permissions are binary: Agents either have access to everything within a system or nothing at all. There's rarely any contextual authorization based on the task being performed or the data being accessed.

- Auditability is limited: When something goes wrong, it's hard to trace exactly what happened, which agent took which action, and under what context.

- Onboarding is complex: Setting up a new agent requires coordinating across multiple teams, manually configuring credentials, and documenting tribal knowledge.

Without solving these problems systematically, we're setting ourselves up for security incidents, compliance nightmares, and scalability bottlenecks. The sooner we address this, the less technical debt we'll accumulate.

## 2. The Architecture Overview

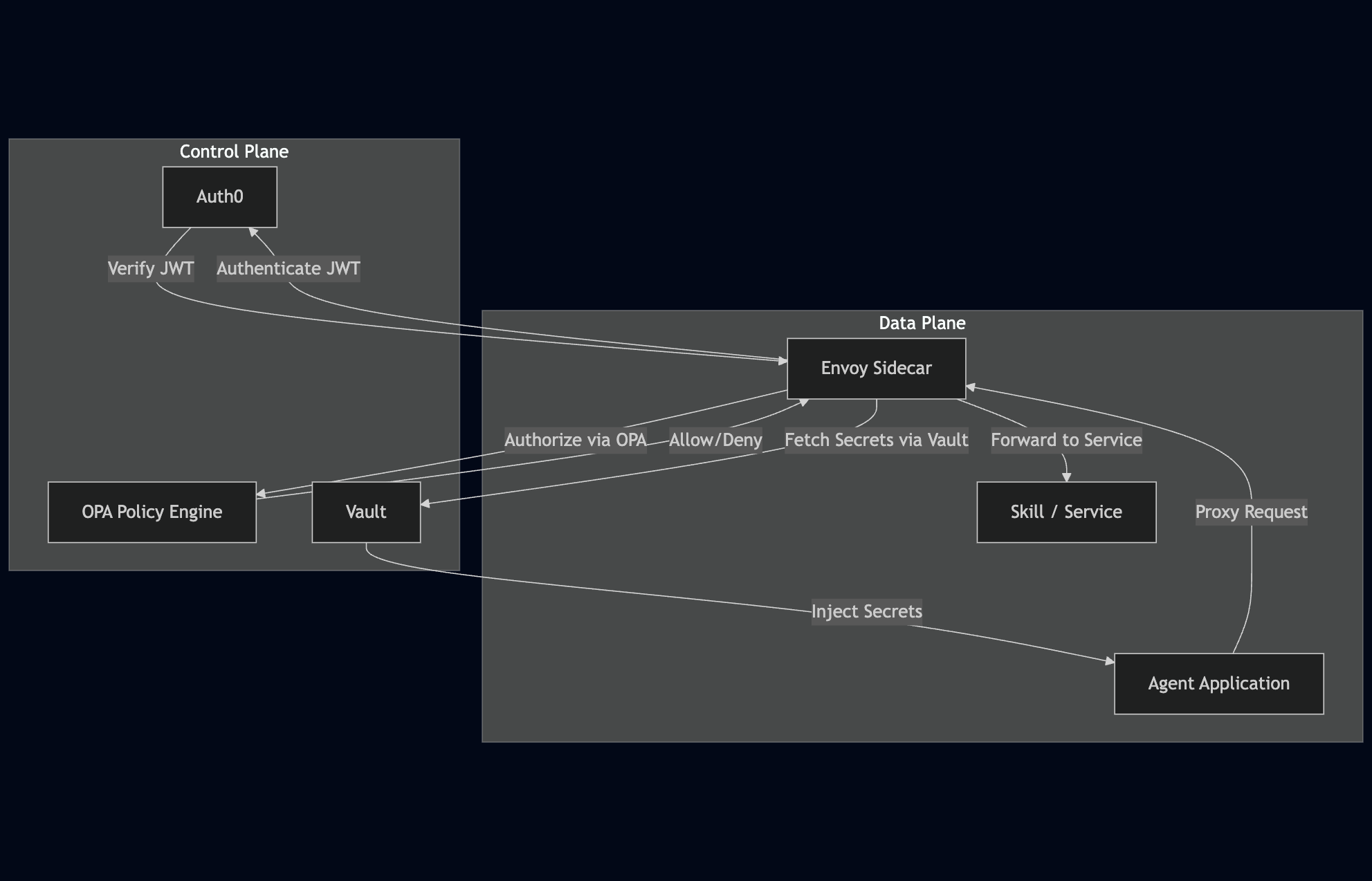

Before diving into the components, let's visualize the flow. An Agent interacts with the rest of the world only through its local Envoy sidecar. The sidecar acts as a policy enforcement point (PEP) and interacts with control plane services (Auth0, OPA, Vault) to make decisions before proxying the request to an upstream Skill or Service.

Figure 1: High-level architecture

This sidecar pattern provides a clean separation of concerns: the agent focuses on its core logic, and the sidecar handles the cross-cutting infrastructure concerns of identity, policy, secrets, and observability.
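The following Python sketch makes that separation concrete. It shows the order of checks a policy enforcement point performs for each request. It is not how Envoy is implemented. The `verify_token`, `authorize`, and `forward` callables are hypothetical stand-ins for the JWT filter, the OPA query, and the upstream call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    method: str
    path: str                    # e.g. "/mcp://sentiment-analysis/analyze"
    bearer_token: Optional[str]  # value after "Bearer " in the Authorization header


@dataclass
class Response:
    status: int
    body: str


def sidecar_handle(
    req: Request,
    verify_token: Callable[[str], Optional[dict]],  # stand-in for the JWT filter: claims or None
    authorize: Callable[[dict, Request], bool],     # stand-in for the OPA query (the PDP)
    forward: Callable[[Request, dict], Response],   # stand-in for proxying to the upstream skill
) -> Response:
    """Policy enforcement point: authenticate, then authorize, then proxy."""
    if not req.bearer_token:
        return Response(401, "missing bearer token")
    claims = verify_token(req.bearer_token)
    if claims is None:
        return Response(401, "invalid token")
    if not authorize(claims, req):
        return Response(403, "denied by policy")
    # Only authenticated and authorized requests ever reach the upstream service
    return forward(req, claims)
```

Agent code never calls the identity provider or the policy engine directly. Either one can therefore be swapped without touching the agent.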

## 3. The Core Components

Let's break down the key pieces of the control plane: Identity, Policy, and Secrets.

### 3.1 Identity with Auth0 (or SPIFFE)

Every agent needs to authenticate securely—ideally using short-lived tokens that are easy to verify and hard to misuse. Auth0 provides a robust identity platform supporting standard protocols like OAuth2/OIDC.

You'll need:

- An Auth0 Resource Server representing your control plane API audience.

- A Machine-to-Machine (M2M) Application configured for each agent, using the Client Credentials flow.

```hcl
# NOTE: Attribute names follow older (v0.x) releases of the Auth0 Terraform provider.
# v1.x renamed or moved several of them (for example, resource-server scopes and
# client credentials now live in separate resources). Verify against your version.

# Define the audience for your agent control plane API
resource "auth0_resource_server" "mcp_api" {
  identifier     = "https://mcp.example.com/" # The API audience agents will request access to
  name           = "AI Agent Control Plane"
  signing_alg    = "RS256"
  token_lifetime = 3600 # Access tokens valid for 1 hour

  skip_consent_for_verifiable_first_party_clients = true

  # Embed a 'permissions' claim in access tokens. This dialect requires RBAC
  # (enforce_policies); other custom claims need an Auth0 Action.
  enforce_policies = true
  token_dialect    = "access_token_authz"

  # Scopes representing capabilities or permissions within the control plane
  scopes {
    value       = "skills:invoke"
    description = "Invoke registered skills"
  }

  # ... other scopes ...

  scopes {
    value       = "data:write"
    description = "Write to registered data sources"
  }
}

# Define a machine-to-machine application representing a specific agent
resource "auth0_client" "agent_app" {
  name                       = "support-triage-agent"
  app_type                   = "non_interactive" # Machine-to-machine application
  grant_types                = ["client_credentials"]
  token_endpoint_auth_method = "client_secret_post"

  jwt_configuration {
    lifetime_in_seconds = 3600 # ID-token lifetime; access tokens use token_lifetime above
    alg                 = "RS256"
  }

  # Custom metadata attached to the agent's client. Auth0 does not copy it into
  # access tokens automatically; an Action on the M2M (credentials-exchange)
  # trigger can add it as namespaced custom claims.
  client_metadata = {
    agent_type  = "support"
    team        = "customer-success"
    environment = "production"
  }
}

# Grant the agent application specific permissions (scopes) for the control plane audience
resource "auth0_client_grant" "agent_permissions" {
  client_id = auth0_client.agent_app.id
  audience  = auth0_resource_server.mcp_api.identifier
  scope     = ["skills:invoke", "data:read"] # This agent can invoke skills and read data
}
```

#### Flow:

1. The Agent application, using its `client_id` and `client_secret`, makes an OAuth2 Client Credentials request to Auth0's `/oauth/token` endpoint.

1. Auth0 authenticates the agent and issues a short-lived JWT access token.

1. The agent includes this JWT in the `Authorization: Bearer <token>` header for all requests it makes to services via its sidecar.
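Steps 1 and 3 can be sketched in Python using only the standard library. The tenant URL and audience are the placeholders used throughout this guide. `build_token_request`, `fetch_access_token`, and `auth_header` are hypothetical helper names. The response is assumed to carry the standard OAuth2 `access_token` field. Only `fetch_access_token` touches the network.

```python
import json
import urllib.request

TOKEN_URL = "https://your-tenant.auth0.com/oauth/token"  # placeholder tenant
AUDIENCE = "https://mcp.example.com/"                    # control plane API audience


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build an OAuth2 Client Credentials request (credentials in the body: client_secret_post)."""
    body = json.dumps({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "audience": AUDIENCE,
    }).encode("utf-8")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def fetch_access_token(client_id: str, client_secret: str) -> str:
    """Send the request and return the short-lived access token (network call)."""
    with urllib.request.urlopen(build_token_request(client_id, client_secret), timeout=10) as resp:
        return json.load(resp)["access_token"]


def auth_header(token: str) -> dict:
    """Header the agent attaches to every request it sends through its sidecar."""
    return {"Authorization": f"Bearer {token}"}
```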

#### JWT Claims Structure:

The JWT issued by Auth0 contains critical metadata for your authorization system:

```json
{
  "iss": "https://your-tenant.auth0.com/", // Issuer (your Auth0 tenant)
  "sub": "client-id@clients",              // Subject (the agent's client ID)
  "aud": "https://mcp.example.com/",       // Audience (your control plane API)
  "iat": 1683026400,                        // Issued-at timestamp
  "exp": 1683030000,                        // Expiration timestamp
  "azp": "client-id",                       // Authorized party (client ID again)
  "gty": "client-credentials",              // Grant type used
  "permissions": [
    "skills:invoke",
    "data:read"
  ],                                        // Scopes granted by Auth0
  "https://mcp.example.com/agent_type": "support", // Custom claims (added by an Auth0 Action from client_metadata)
  "https://mcp.example.com/team": "customer-success",
  "https://mcp.example.com/environment": "production"
}
```

These claims provide rich context for policy decisions downstream (in OPA), enabling fine-grained access control based on:

- Who the agent is (the `sub` claim identifies the agent)

- What it's generally allowed to do (the `permissions` claim)

- What context it's operating in (custom claims like `team`, `environment`, `agent_type`)
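During debugging, it helps to see these claims the way the policy layer will. The Python sketch below base64url-decodes a token's payload segment **without verifying the signature**, because verification is Envoy's job in this design. It then extracts the who / what / context fields. The claim namespace matches the example above. `decode_payload` and `policy_context` are hypothetical names.

```python
import base64
import json

NS = "https://mcp.example.com/"  # namespace used for the custom claims above


def decode_payload(jwt: str) -> dict:
    """Decode a JWT's payload segment WITHOUT verifying the signature (inspection only)."""
    payload_b64 = jwt.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(padded))


def policy_context(claims: dict) -> dict:
    """Pull out the fields the authorization layer reasons about."""
    return {
        "who": claims.get("sub"),
        "what": claims.get("permissions", []),
        "team": claims.get(NS + "team"),
        "environment": claims.get(NS + "environment"),
        "agent_type": claims.get(NS + "agent_type"),
    }
```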

#### Alternative: SPIFFE for Zero Trust

For organizations that are already invested in a service mesh like Istio, or that prefer a more Kubernetes-native approach focused on workload identity, SPIFFE (Secure Production Identity Framework for Everyone) offers a compelling alternative. SPIFFE provides cryptographically verifiable, short-lived identities (`spiffe://<trust_domain>/...`) to workloads. These are typically delivered through the SPIFFE Workload API as X.509-SVIDs.

```yaml
# ClusterSPIFFEID is the SPIRE Controller Manager CRD. The older
# k8s-workload-registrar used a namespaced "SpiffeID" kind instead.
# Check which API version your SPIRE deployment serves.
apiVersion: spire.spiffe.io/v1alpha1
kind: ClusterSPIFFEID
metadata:
  name: support-triage-agent # Cluster-scoped resource, so no namespace here
spec:
  # SPIFFE ID issued to matching pods; {{ .TrustDomain }} expands to the SPIRE
  # trust domain (e.g., spiffe://your-trust-domain.com/agent/support-triage)
  spiffeIDTemplate: "spiffe://{{ .TrustDomain }}/agent/support-triage"
  # Select the Kubernetes pods that should receive this identity
  podSelector:
    matchLabels:
      app: support-triage-agent
      environment: production
  namespaceSelector:
    matchLabels:
      kubernetes.io/metadata.name: agents
  # Optional: federate with external trust domains (their bundle endpoints
  # are configured separately, e.g. with a ClusterFederatedTrustDomain)
  # federatesWith:
  #   - example.com
```

In a SPIFFE-based setup, the Envoy sidecar would use mTLS with the SPIFFE identity to authenticate the agent workload. OPA policies could then use the extracted SPIFFE ID (e.g., from the client certificate URI SAN) as the subject for authorization decisions, potentially fetching additional metadata about the workload from a separate identity registry if needed. Both Auth0 JWTs and SPIFFE provide strong, verifiable identity signals that are crucial for the authorization layer.
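Here is a minimal Python sketch of that subject extraction. It assumes the caller already has the URI SANs from the verified peer certificate. It performs only basic checks, not full SPIFFE ID validation. `parse_spiffe_id` and `agent_subject` are hypothetical helper names.

```python
from typing import List, Optional, Tuple
from urllib.parse import urlparse


def parse_spiffe_id(spiffe_id: str) -> Tuple[str, str]:
    """Split a SPIFFE ID into (trust_domain, workload_path); basic checks only."""
    parsed = urlparse(spiffe_id)
    if parsed.scheme != "spiffe" or not parsed.netloc:
        raise ValueError(f"not a SPIFFE ID: {spiffe_id!r}")
    if parsed.query or parsed.fragment:
        raise ValueError("SPIFFE IDs must not contain a query or fragment")
    return parsed.netloc, parsed.path


def agent_subject(uri_sans: List[str], trust_domain: str) -> Optional[str]:
    """Return the first SAN that is a SPIFFE ID in the expected trust domain."""
    for san in uri_sans:
        try:
            domain, _ = parse_spiffe_id(san)
        except ValueError:
            continue  # not a SPIFFE URI (e.g., some other SAN); skip it
        if domain == trust_domain:
            return san
    return None
```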

### 3.2 Policy with OPA + Envoy

Use [Open Policy Agent (OPA)](https://www.openpolicyagent.org/) to write policy bundles in Rego. Unlike hardcoded `if/else` rules within services, Rego gives you a declarative language for expressing complex, context-aware authorization logic externalized from your application code. Envoy acts as the Policy Enforcement Point (PEP), querying OPA (the Policy Decision Point or PDP) for authorization decisions before forwarding requests.
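For reference, here is what a decision query looks like from outside OPA. OPA's Data API accepts `POST /v1/data/<package path>/<rule>` with a JSON body of the form `{"input": ...}`. It replies with `{"result": ...}` and omits `result` when the decision is undefined. The Python sketch below targets the `mcp/authz/allow` decision from this guide. The `opa:8181` address matches the Envoy configuration later on. The helper names are hypothetical. The sketch fails closed, in the spirit of `failure_mode_allow: false`.

```python
import json
import urllib.request

OPA_URL = "http://opa:8181/v1/data/mcp/authz/allow"  # decision path used in this guide


def build_decision_request(opa_input: dict) -> urllib.request.Request:
    """Wrap the input document the way OPA's Data API expects."""
    body = json.dumps({"input": opa_input}).encode("utf-8")
    return urllib.request.Request(
        OPA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def is_allowed(response_body: bytes) -> bool:
    """Only an explicit `true` allows; false or undefined (no 'result') denies."""
    return json.loads(response_body).get("result") is True


def query_opa(opa_input: dict, timeout: float = 0.5) -> bool:
    """Ask the PDP for a decision; any network or parsing error means deny."""
    try:
        with urllib.request.urlopen(build_decision_request(opa_input), timeout=timeout) as resp:
            return is_allowed(resp.read())
    except (OSError, ValueError):
        return False
```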

#### Base Policy Structure (Rego)

```rego
package mcp.authz # Define the policy package

import future.keywords # Enables 'if', 'in', 'contains', and 'every' (built in as of OPA 1.0)

import input.jwt as token # Alias input.jwt for easier access to the JWT payload

# --- Final Decision ---
# Default deny. A request is allowed only if at least one 'permitted' path below
# matches AND no deny rule fires. Rules sharing a name are OR'd, so the deny
# override has to be checked here, once, for every path.
default allow := false

allow if {
    permitted
    not deny_reason
}

# --- Common Conditions ---
# Signature, issuer, and audience were already verified by Envoy's jwt_authn filter.
token_valid if token.valid == true

token_not_expired if time.now_ns() < token.payload.exp * 1000000000 # JWT exp is in seconds

# --- Path 1: Action-Type Permission ---
permitted if {
    token_valid
    token_not_expired
    has_permission_for_action # Do the token's permissions allow this *type* of action?
}

# Checks that the agent's JWT permissions include the scope required for the request path.
# Example: a request to /mcp://skill-name/invoke_skill requires "skills:invoke".
has_permission_for_action if {
    # NOTE: Relying on fixed path indices (input.request.path[n]) is brittle.
    # Consider having the Lua filter extract the action into a dedicated
    # field (e.g., input.request.action) for robustness.
    path_parts := input.request.path
    count(path_parts) > 3 # Expect ["", "mcp:", service, action, ...]
    action_verb := path_parts[3]

    # Map action verbs to required OAuth2 scopes (permissions)
    action_permission_map := {
        "invoke_skill": "skills:invoke",
        "access_tool": "tools:access",
        "read_data": "data:read",
        "write_data": "data:write",
        "list_skills": "skills:list",
        "status": "system:status", # Example for a system-level check
    }
    required_permission := action_permission_map[action_verb]

    # Is the required permission present in the token's permissions array?
    required_permission in token.payload.permissions
}

# --- Path 2: Service-Specific Permissions ---
# Grants access when the agent holds ALL permissions mapped to the target service.
permitted if {
    token_valid
    token_not_expired
    service := input.request.path[2] # NOTE: Brittle path parsing, same as above.
    has_service_permission(service)
}

# Helper: true if the token carries every permission listed for the service
has_service_permission(service) if {
    service_permissions := {
        "customer-data": ["data:read", "customer:access"],
        "billing-system": ["billing:read", "finance:access"],
        "support-tools": ["support:access"],
        "sentiment-analysis": ["skills:invoke"], # Overlaps Path 1, but shows the pattern
    }
    required_permissions := service_permissions[service] # Undefined for unlisted services
    every p in required_permissions {
        p in token.payload.permissions
    }
}

# --- Path 3: Environment Match ---
# Grants access when the resource's environment (e.g., looked up by the Lua filter
# from a registry) matches the agent's environment claim.
# CAUTION: 'permitted' paths are OR'd, so this grants access on its own. To use it
# as an extra restriction instead, move these conditions into another path's body.
permitted if {
    token_valid
    token_not_expired
    resource_env := input.resource.metadata.environment
    agent_env := token.payload["https://mcp.example.com/environment"]
    resource_env == agent_env
}

# --- Path 4: Team Match ---
# Grants access when the resource belongs to the agent's team (same caveat as Path 3).
permitted if {
    token_valid
    token_not_expired
    agent_team := token.payload["https://mcp.example.com/team"]
    resource_team := input.resource.metadata.team # e.g., fetched by the Lua filter
    agent_team == resource_team
}

# --- Deny Rule: Off-Hours Restrictions ---
# Blocks high-risk methods outside business hours, overriding every 'permitted' path.
current_hour := time.clock(time.now_ns())[0] # UTC

# Off-hours: before 9 AM or from 5 PM UTC (Rego has no 'or'; use one rule per case)
off_hours if current_hour < 9

off_hours if current_hour >= 17

high_risk_methods := {"PUT", "POST", "DELETE", "PATCH"}

deny_reason := "Operation not permitted during off-hours" if {
    off_hours
    input.request.method in high_risk_methods
    # Optionally exempt specific agent types, e.g.:
    # token.payload["https://mcp.example.com/agent_type"] != "critical-incident-response"
}
```

#### Request Context Structure (OPA Input)

For the OPA policy to evaluate correctly, Envoy needs to construct an appropriate input document based on the incoming request and extracted JWT claims. The policy expects a structure like this:

```json
{
  "jwt": {
    "valid": true, // Set once Envoy's JWT filter has validated the token
    "payload": {
      "sub": "client-id@clients",
      "permissions": ["skills:invoke", "data:read"],
      "https://mcp.example.com/agent_type": "support",
      "https://mcp.example.com/team": "customer-success",
      "https://mcp.example.com/environment": "production",
      "exp": 1683030000 // JWT expiration timestamp (seconds since epoch)
    }
  },
  "request": {
    "method": "POST",
    "path": ["", "mcp:", "sentiment-analysis", "invoke_skill"], // Path split into segments
    "headers": {
      "x-source-system": "support-portal",
      "content-type": "application/json",
      "authorization": "Bearer ..." // Sensitive headers like this should be excluded in production
    }
  },
  "resource": {
    "type": "service", // Type of resource being accessed (e.g., service, data_source, tool)
    "id": "sentiment-analysis", // Identifier of the resource
    "metadata": {
      // Contextual metadata about the resource, crucial for policy decisions.
      // In production, fetch it from a configuration registry or service
      // catalog keyed by the resource ID (e.g., in the Lua filter).
      "team": "ml",
      "environment": "production",
      "data_classification": "low"
    }
  }
}
```

Envoy uses filters in its HTTP connection manager to build this input.
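The Python sketch below assembles the same input document, including the path split, that the Lua filter below builds. Python lists are 0-indexed, so the service name sits at index 2, as in the Rego policy. In a 1-indexed Lua table, the same segment sits at index 3. `SERVICE_METADATA` is a hypothetical stand-in for a service registry.

```python
from typing import Dict, List

# Hypothetical stand-in for the registry that the Lua filter's service_metadata() mimics
SERVICE_METADATA: Dict[str, Dict[str, str]] = {
    "sentiment-analysis": {"team": "ml", "environment": "production", "data_classification": "low"},
    "customer-lookup": {"team": "customer-success", "environment": "production", "data_classification": "medium"},
}
UNKNOWN_METADATA = {"team": "unknown", "environment": "unknown", "data_classification": "unknown"}
FORWARDED_HEADERS = ("x-source-system", "content-type")  # curated list; never Authorization


def split_path(path: str) -> List[str]:
    """'/mcp://svc/act?x=1' -> ['', 'mcp:', 'svc', 'act'], the shape the policy expects."""
    clean = path.split("?", 1)[0]               # drop any query string
    parts = [p for p in clean.split("/") if p]  # '//' after 'mcp:' yields an empty segment; skip it
    return [""] + parts if clean.startswith("/") else parts


def build_opa_input(jwt_payload: dict, method: str, path: str, headers: Dict[str, str]) -> dict:
    parts = split_path(path)
    service = parts[2] if len(parts) > 2 else "unknown"  # 0-indexed: ['', 'mcp:', service, ...]
    return {
        "jwt": {"valid": True, "payload": jwt_payload},  # caller has already verified the token
        "request": {
            "method": method,
            "path": parts,
            "headers": {k: headers[k] for k in FORWARDED_HEADERS if k in headers},
        },
        "resource": {
            "type": "service",
            "id": service,
            "metadata": SERVICE_METADATA.get(service, UNKNOWN_METADATA),
        },
    }
```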

#### Wiring it up in Envoy:

The Envoy configuration defines a chain of HTTP filters that process incoming requests before routing them to the upstream service.

```yaml
http_filters:
  # 1. JWT Authentication Filter: validates the incoming JWT and stores its
  #    payload in dynamic metadata for the filters that follow.
  - name: envoy.filters.http.jwt_authn
    typed_config:
      "@type": "type.googleapis.com/envoy.extensions.filters.http.jwt_authn.v3.JwtAuthentication"
      providers:
        auth0:                               # Name of the JWT provider configuration
          issuer: "https://your-tenant.auth0.com/"
          audiences: ["https://mcp.example.com/"]
          forward: true                      # Pass the Authorization header upstream (optional)
          payload_in_metadata: "jwt_payload" # Metadata key the Lua filter reads the payload from
          from_headers:
            - name: "Authorization"          # Look for the token in the Authorization header
              value_prefix: "Bearer "
          remote_jwks:                       # Where to fetch the public keys that verify signatures
            http_uri:
              uri: "https://your-tenant.auth0.com/.well-known/jwks.json"
              cluster: auth0_jwks            # Envoy cluster pointing to Auth0's JWKS endpoint
              timeout: 5s
            cache_duration:                  # Cache the JWKS response
              seconds: 300
          # For local demos, prefer 'local_jwks' with a test key over disabling
          # signature verification. Never skip verification in production.
      rules:
        # Apply this JWT validation rule only to requests starting with /mcp://
        - match:
            prefix: "/mcp://"
          requires:
            provider_name: "auth0"           # Requires validation by the 'auth0' provider

  # 2. Lua Filter (pre-authz): runs after JWT validation, turns the JWT claims and
  #    request path/headers into the OPA input document, and hands it to the
  #    ext_authz filter in the x-opa-input header.
  - name: envoy.filters.http.lua
    typed_config:
      "@type": "type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua"
      inline_code: |
        -- JSON encoder: cjson is not bundled with every Envoy build; verify yours
        local cjson = require "cjson"

        -- Split "/mcp://svc/act?x=1" into {"", "mcp:", "svc", "act"}
        local function split_path(path)
          local clean = (path or ""):gsub("%?.*$", "") -- drop any query string
          local parts = {}
          if clean:sub(1, 1) == "/" then
            parts[1] = "" -- leading empty segment, matching the OPA input example
          end
          for part in clean:gmatch("[^/]+") do
            parts[#parts + 1] = part
          end
          return parts
        end

        -- Forward only a curated set of headers to OPA; never Authorization
        local function extract_headers(headers)
          return {
            ["x-source-system"] = headers:get("x-source-system"),
            ["content-type"] = headers:get("content-type"),
          }
        end

        -- Hardcoded for this example; production would query a service registry
        local function service_metadata(service)
          local metadata_map = {
            ["hello-skill"] = { team = "platform", environment = "production", data_classification = "low" },
            ["sentiment-analysis"] = { team = "ml", environment = "production", data_classification = "low" },
            ["customer-lookup"] = { team = "customer-success", environment = "production", data_classification = "medium" },
            ["support-system"] = { team = "customer-success", environment = "production", data_classification = "medium" },
            -- Add metadata for other services...
          }
          return metadata_map[service]
            or { team = "unknown", environment = "unknown", data_classification = "unknown" }
        end

        function envoy_on_request(request_handle)
          local headers = request_handle:headers()
          local metadata = request_handle:streamInfo():dynamicMetadata()

          -- Strip headers a client might try to spoof before doing anything else
          for _, name in ipairs({ "x-opa-input", "x-agent-id", "x-agent-type", "x-agent-team" }) do
            headers:remove(name)
          end

          -- Remember Envoy's request ID so envoy_on_response can echo it
          local request_id = headers:get("x-request-id")
          if request_id then
            metadata:set("mcp", "request_id", request_id)
          end

          -- Verified payload written by jwt_authn (payload_in_metadata: jwt_payload)
          local authn = metadata:get("envoy.filters.http.jwt_authn")
          local jwt_payload = authn and authn["jwt_payload"]
          if not jwt_payload then
            request_handle:logWarn("JWT payload not found in metadata.")
            return
          end

          -- Agent identity headers for downstream logging/auditing
          headers:replace("x-agent-id", jwt_payload["sub"] or "")
          headers:replace("x-agent-type", jwt_payload["https://mcp.example.com/agent_type"] or "")
          headers:replace("x-agent-team", jwt_payload["https://mcp.example.com/team"] or "")

          -- Lua tables are 1-indexed: parts = {"", "mcp:", "<service>", "<action>", ...}
          local path_parts = split_path(headers:get(":path"))
          local service_name = path_parts[3] or "unknown"

          local opa_input = {
            jwt = { valid = true, payload = jwt_payload }, -- jwt_authn already verified the token
            request = {
              method = headers:get(":method"),
              path = path_parts,
              headers = extract_headers(headers),
            },
            resource = {
              type = "service", -- Type of the entity being accessed
              id = service_name,
              metadata = service_metadata(service_name),
            },
          }

          -- The ext_authz filter is configured to forward this header
          headers:replace("x-opa-input", cjson.encode(opa_input))
        end

        -- Echo the request ID on the response for debugging/correlation
        function envoy_on_response(response_handle)
          local mcp = response_handle:streamInfo():dynamicMetadata():get("mcp")
          if mcp and mcp["request_id"] then
            response_handle:headers():replace("x-mcp-trace-id", mcp["request_id"])
          end
        end

  # 3. External Authorization (ext_authz) Filter: sends the request to the
  #    authorization service and enforces its decision.
  # NOTE: OPA's REST Data API answers 200 OK (with {"result": ...}) whether the
  # decision is true or false, and Envoy's HTTP ext_authz treats any 200 as allow.
  # Enforcing OPA's decision therefore needs a small adapter in front of OPA, or
  # the OPA-Envoy plugin's gRPC ext_authz server (envoy_ext_authz_grpc in the OPA
  # config later in this guide). That server's input document has a different
  # shape, so the policy's input paths would need adjusting.
  - name: envoy.filters.http.ext_authz
    typed_config:
      "@type": "type.googleapis.com/envoy.extensions.filters.http.ext_authz.v3.ExtAuthz"
      failure_mode_allow: false          # If the authz service is down, deny requests. Crucial for security.
      http_service:
        server_uri:                      # How to reach the authorization service
          uri: http://opa:8181/v1/data/mcp/authz # OPA's API endpoint for our policy
          cluster: opa                   # Envoy cluster pointing to the OPA service
          timeout: 0.5s                  # Timeout for the authorization query
        authorization_request:
          # Headers from the original request sent to the authz service;
          # here only the x-opa-input header prepared by the Lua filter.
          allowed_headers:
            patterns:
              - exact: "x-opa-input"
        authorization_response:
          # Headers in the authz service's *response* that may be copied onto the
          # upstream request. (Headers the Lua filter set are already on it.)
          allowed_upstream_headers:
            patterns:
              - exact: "x-agent-id"
              - exact: "x-agent-type"
              - exact: "x-agent-team"
              - prefix: "x-mcp-"         # Any custom context headers starting with x-mcp-

  # 4. Lua Filter (post-authz): runs only after authorization succeeds. Here it is
  #    a demonstration hook. Secret injection belongs in mechanisms like Vault
  #    Agent, *not* in headers added here, due to security risks.
  - name: envoy.filters.http.lua
    typed_config:
      "@type": "type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua"
      inline_code: |
        function envoy_on_request(request_handle)
          -- Envoy's connection manager sets x-request-id by default; log it for correlation
          local request_id = request_handle:headers():get("x-request-id") or "none"
          request_handle:logInfo("authorized request " .. request_id)

          -- Mock header indicating auth passed; actual secrets come from Vault Agent
          request_handle:headers():replace("x-mcp-secrets-available", "true")
        end

  # 5. Router Filter: the final filter; routes the request to the upstream
  #    cluster chosen by the route configuration.
  - name: envoy.filters.http.router
    typed_config:
      "@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"
```

#### Understanding the `mcp://` Scheme

You'll notice the `prefix: "/mcp://"` match in the Envoy configuration above. The Python SDK also uses URLs like `mcp://skill-name/path`.

**Crucially, `mcp://` is *not* a new global internet protocol.** It's a local **convention** used *within this specific sidecar architecture*.

1. The **Agent SDK** constructs URLs starting with `mcp://<service-name>/<path>` (e.g., `mcp://sentiment-analysis/analyze`).

1. The **Agent** sends this HTTP request (via the SDK) to its **local Envoy sidecar** (`http://127.0.0.1:15000`).

1. The **Envoy sidecar** receives the request. Its `route_config` contains a route matching the `/mcp://` prefix.

1. This route uses a `regex_rewrite` to remove the `/mcp://<service-name>` part from the path, leaving only the original path (`/analyze` in the example).

1. More importantly, the route configuration uses the `<service-name>` part of the original path (`sentiment-analysis`) to select the correct **upstream cluster**, typically one named after the service (e.g., a `sentiment-analysis` cluster). Envoy does not derive clusters from path segments by itself. In practice, this means either one route per service, or a filter that copies the service name into a header consumed by the route's `cluster_header` option.

1. The request is then routed to the selected upstream cluster with the rewritten path (`/analyze`).

*(Note: The Envoy configuration snippet above shows the `http_filters` but **omits the `route_config` block for brevity**. A complete `envoy.yaml` would require a `route_config` section defining the virtual hosts, routes matching `/mcp://...`, the `regex_rewrite` logic, and the cluster selection for each service.)*

This pattern provides a simple abstraction for the agent: it just needs to know the logical name of the service (`sentiment-analysis`), and the sidecar handles finding and routing to the actual network location of that service.
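The following Python sketch emulates that routing contract. It assumes one upstream cluster per service, named after the service. `sidecar_url` returns what an SDK would send. `route` returns the cluster name and the rewritten path. In Envoy itself, the rewrite corresponds to a route's `regex_rewrite`, and the cluster choice corresponds to per-service routes or `cluster_header`. The regex and helper names here are hypothetical.

```python
import re
from typing import Optional, Tuple

SIDECAR = "http://127.0.0.1:15000"  # local sidecar address used in this guide
MCP_ROUTE = re.compile(r"^/mcp://(?P<service>[a-z0-9-]+)(?P<rest>/.*)?$")


def sidecar_url(service: str, path: str) -> str:
    """The mcp:// URL travels as the *path* of a plain HTTP request to the sidecar."""
    return f"{SIDECAR}/mcp://{service}{path}"


def route(request_path: str) -> Optional[Tuple[str, str]]:
    """Pick the cluster named after the service and strip the /mcp://<service> prefix."""
    m = MCP_ROUTE.match(request_path)
    if m is None:
        return None  # not an mcp:// request; other routes would handle it
    return m.group("service"), m.group("rest") or "/"
```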

#### Deployment and Bundle Management

OPA policies should be treated like code – versioned, tested, and deployed through CI/CD pipelines. OPA can be configured to pull policy bundles from a remote HTTP server, S3 bucket, or other sources.
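In practice, `opa build -b` produces bundles for you. The Python sketch below only illustrates the layout a bundle server would host at a path such as `bundles/authz`: a gzipped tarball of `.rego` files plus an optional `.manifest` that records a revision and the package roots the bundle owns. The function name and file paths are illustrative assumptions.

```python
import io
import json
import tarfile
from typing import Dict, Tuple


def build_bundle(policies: Dict[str, str], revision: str, roots: Tuple[str, ...] = ("mcp",)) -> bytes:
    """Return a gzipped tarball laid out as an OPA bundle.

    policies maps paths inside the bundle (e.g. "mcp/authz/policy.rego") to Rego source.
    """
    files = dict(policies)
    files[".manifest"] = json.dumps({"revision": revision, "roots": list(roots)})
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for name, text in sorted(files.items()):
            data = text.encode("utf-8")
            info = tarfile.TarInfo(name=name)
            info.size = len(data)  # tar headers need the exact byte size
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()
```

A CI job could call something like this (or `opa build`) after the policy tests pass. It would then upload the result, using the Git commit as the revision.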

```yaml
# opa-bundler.yaml - Example OPA configuration for fetching bundles
bundles:
  authz:                        # Name of the bundle
    service: bundles            # Reference to the service configuration below
    resource: bundles/authz     # Path on the bundle server where the bundle is located
    persist: true               # Keep the last bundle on disk across restarts
    polling:                    # How often OPA checks for updates
      min_delay_seconds: 60
      max_delay_seconds: 120

services:
  bundles:                      # Configuration for the bundle server
    url: https://opa-bundles.example.com # URL where bundles are hosted
    credentials:                # Authentication for the bundle server
      bearer:
        token_path: /var/run/secrets/bundle-auth-token # File containing the token

plugins:
  envoy_ext_authz_grpc:         # gRPC ext_authz server; requires the OPA-Envoy build of OPA
    addr: :9191
    path: mcp/authz/allow       # The policy decision OPA evaluates for each check
    dry-run: false
    enable-reflection: false
```

For policy testing, create a suite of test cases in Rego:

```rego
package mcp.authz_test # Define a test package

import future.keywords

import data.mcp.authz # Import the policy we are testing

# Fixed clock so results don't depend on when the tests run:
# 1683028800 s = 2023-05-02 12:00:00 UTC, inside business hours
mock_now_s := 1683028800

mock_now_ns := mock_now_s * 1000000000

future_timestamp := mock_now_s + 3600 # 1 hour after the mocked "now" (seconds)

past_timestamp := mock_now_s - 3600 # 1 hour before the mocked "now" (seconds)

# Test case: allow a request with a valid token and the required permission
test_allow_valid_token_with_permission if {
    allow_result := authz.allow with input as {
        "jwt": {
            "valid": true,
            "payload": {
                "permissions": ["skills:invoke"], # Agent has invoke permission
                "exp": future_timestamp, # Token is not expired
            },
        },
        "request": {
            "method": "POST",
            "path": ["", "mcp:", "sentiment-analysis", "invoke_skill"], # Needs skills:invoke
        },
        "resource": {
            "id": "sentiment-analysis",
            "metadata": {"team": "ml", "environment": "production"},
        },
    } with time.now_ns as mock_now_ns

    allow_result == true
}

# Test case: deny a request with an expired token
test_deny_expired_token if {
    allow_result := authz.allow with input as {
        "jwt": {
            "valid": true,
            "payload": {
                "permissions": ["skills:invoke"],
                "exp": past_timestamp, # Token is expired
            },
        },
        "request": {
            "method": "POST",
            "path": ["", "mcp:", "sentiment-analysis", "invoke_skill"],
        },
        "resource": {
            "id": "sentiment-analysis",
            "metadata": {"team": "ml", "environment": "production"},
        },
    } with time.now_ns as mock_now_ns

    allow_result == false
}

# Add more tests covering:
# - Missing permission
# - Service-specific permission check failure / success
# - Environment mismatch / match
# - Team mismatch / match
# - Off-hours deny rule triggers
# - Off-hours deny rule does NOT trigger for allowed operations/times
# - Default deny when no rules match
```

### 3.3 Secrets with Vault

HashiCorp Vault provides a secure, centralized secrets management system with powerful features like dynamic secrets, leasing, and fine-grained access control based on identity.

#### Setup JWT Auth Backend

Vault can be configured to authenticate users or machines using JWTs issued by trusted identity providers like Auth0.
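In this architecture, Vault Agent would normally perform this exchange on the agent's behalf. The Python sketch below just makes the two HTTP calls visible. It assumes the JWT auth method is mounted at `jwt` with the `agent` role configured below, a KV v2 engine is mounted at `secret/`, and a placeholder `VAULT_ADDR`. The helper names are hypothetical.

```python
import json
import urllib.request

VAULT_ADDR = "https://vault.example.com:8200"  # placeholder address


def build_login_request(agent_jwt: str, role: str = "agent") -> urllib.request.Request:
    """Exchange the agent's Auth0 JWT for a short-lived Vault token."""
    body = json.dumps({"role": role, "jwt": agent_jwt}).encode("utf-8")
    return urllib.request.Request(
        f"{VAULT_ADDR}/v1/auth/jwt/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def client_token_from(login_response: bytes) -> str:
    """The Vault token is returned under auth.client_token."""
    return json.loads(login_response)["auth"]["client_token"]


def build_kv_read(vault_token: str, path: str) -> urllib.request.Request:
    """KV v2 read, e.g. path='mcp/agents/common/llm' under the 'secret' mount."""
    return urllib.request.Request(
        f"{VAULT_ADDR}/v1/secret/data/{path}",
        headers={"X-Vault-Token": vault_token},
        method="GET",
    )


def secret_values_from(kv_response: bytes) -> dict:
    """KV v2 nests the secret's key/value pairs under data.data."""
    return json.loads(kv_response)["data"]["data"]
```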

```hcl

# Enable and configure the JWT auth backend to trust Auth0
resource "vault_jwt_auth_backend" "auth0" {
  path         = "jwt"                                                 # Mount the auth method at auth/jwt
  default_role = "agent"                                               # Role used when a login request doesn't name one
  jwks_url     = "https://your-tenant.auth0.com/.well-known/jwks.json" # Vault fetches Auth0's public signing keys here
  # jwt_validation_pubkeys = []                                        # Alternative to jwks_url for static keys
  bound_issuer = "https://your-tenant.auth0.com/"                      # Validate the 'iss' claim in the JWT
}

# Define a role that maps properties of the JWT to Vault policies and TTLs
resource "vault_jwt_auth_backend_role" "agent" {
  backend   = vault_jwt_auth_backend.auth0.path
  role_name = "agent"
  role_type = "jwt" # This role is for JWT (not OIDC) authentication

  bound_audiences = ["https://mcp.example.com/"] # Validate the 'aud' claim
  bound_claims = {                               # Validate specific claims in the JWT payload
    "https://mcp.example.com/environment" = "production" # Only allow agents from the production environment
  }
  bound_claims_type = "string" # Match bound_claims values exactly ("glob" would allow wildcards)
  user_claim        = "sub"    # Use the JWT 'sub' claim as the identity alias name

  token_ttl      = 600            # Vault tokens issued via this role default to a 10-minute TTL
  token_max_ttl  = 1200           # ...and can be renewed up to 20 minutes
  token_policies = ["agent-base"] # Attach the 'agent-base' policy to tokens issued via this role
}

# Define a Vault policy that grants access to paths based on identity metadata
resource "vault_policy" "agent_base" {
  name = "agent-base"

  policy = <<EOF
# Base access for all agents
path "secret/data/mcp/agents/common/*" {
  capabilities = ["read"] # Allow reading secrets in the common path
}

# Team-specific access: Vault substitutes {{identity.entity.metadata.team}}
# from the metadata stored on the agent's identity entity
path "secret/data/mcp/agents/teams/{{identity.entity.metadata.team}}/*" {
  capabilities = ["read"]
}

# Agent-specific access: {{identity.entity.name}} is the name of the identity entity
path "secret/data/mcp/agents/{{identity.entity.name}}/*" {
  capabilities = ["read"]
}

# Dynamic database credentials, selected by agent type.
# Assumes database roles such as 'support-readonly' and 'analysis-readonly' exist.
path "database/creds/{{identity.entity.metadata.agent_type}}-readonly" {
  capabilities = ["read"]
}

# Allow agents to mint short-lived, restricted tokens for downstream services (if needed).
# auth/token/create/<role> requires the 'update' capability and a token role named 'service'.
path "auth/token/create/service" {
  capabilities = ["update"]
  allowed_parameters = {              # Parameters not listed here are rejected
    "policies" = ["service-policy"]   # Only allow assigning 'service-policy'
    "ttl"      = ["5m", "10m"]        # Only allow TTLs of 5 or 10 minutes
  }
}
EOF
}

# Create a Vault identity entity and alias that link the external identity (the JWT 'sub')
# to an internal Vault identity with metadata. Policies can then reference that metadata.
resource "vault_identity_entity" "agent" {
  # The entity name is what {{identity.entity.name}} resolves to in policies
  name = "support-triage-agent"

  metadata = { # Metadata about the agent, e.g., mirroring Auth0 custom claims
    team        = "customer-success"
    agent_type  = "support"
    environment = "production"
  }
}

# Link the Auth0 machine-to-machine client (the JWT 'sub') to the identity entity
resource "vault_identity_entity_alias" "agent_jwt" {
  # The alias name must *exactly* match the 'sub' claim in the JWTs. For Auth0
  # client-credentials tokens this is typically "<client_id>@clients"; adjust if needed.
  name           = "${auth0_client.agent_app.client_id}@clients"
  canonical_id   = vault_identity_entity.agent.id        # The entity created above
  mount_accessor = vault_jwt_auth_backend.auth0.accessor # The JWT auth backend mount
}
```
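To see what the templated paths in `agent-base` actually grant a given entity, it can help to model the substitution and matching by hand. The Python sketch below is a simplified approximation, not Vault's policy engine. It handles only `{{identity.entity.name}}` and `{{identity.entity.metadata.<key>}}`, treats a trailing `*` as a prefix match (ignoring Vault's `+` segment wildcard), and, as a conservative choice, treats any unresolved placeholder as "path not granted".

```python
import re
from typing import Dict, Optional

# Matches {{identity.entity.name}} and {{identity.entity.metadata.<key>}}
_PLACEHOLDER = re.compile(
    r"\{\{\s*identity\.entity\.(name|metadata\.([A-Za-z0-9_]+))\s*\}\}"
)


def render_policy_path(template: str, entity_name: str, metadata: Dict[str, str]) -> Optional[str]:
    """Substitute identity placeholders; return None if any value is missing."""
    missing = []

    def substitute(match):
        if match.group(1) == "name":
            value = entity_name
        else:
            value = metadata.get(match.group(2))
        if not value:
            missing.append(match.group(0))  # Remember the unresolved placeholder
            return ""
        return value

    rendered = _PLACEHOLDER.sub(substitute, template)
    return None if missing else rendered


def path_allowed(pattern: str, request_path: str) -> bool:
    """A trailing '*' is a prefix match; otherwise the path must match exactly."""
    if pattern.endswith("*"):
        return request_path.startswith(pattern[:-1])
    return request_path == pattern


if __name__ == "__main__":
    meta = {"team": "customer-success", "agent_type": "support"}
    team_path = render_policy_path(
        "secret/data/mcp/agents/teams/{{identity.entity.metadata.team}}/*",
        "support-triage-agent",
        meta,
    )
    print(team_path)  # secret/data/mcp/agents/teams/customer-success/*
    print(path_allowed(team_path, "secret/data/mcp/agents/teams/customer-success/slack"))  # True
    print(path_allowed(team_path, "secret/data/mcp/agents/teams/ml/slack"))  # False
```

With the entity defined above, the dynamic-credentials path renders to `database/creds/support-readonly`, which is exactly the role the next section creates.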

#### Dynamic Database Credentials

For agents that need database access, Vault can generate dynamic, short-lived credentials on demand.

```hcl

# Mount the database secrets engine and configure a PostgreSQL connection
resource "vault_database_secrets_mount" "postgres" {
  path = "database" # Mount the engine at database/

  postgresql {
    name        = "customer-db"
    plugin_name = "postgresql-database-plugin"
    # Vault fills {{username}} and {{password}} from the fields below.
    # sslmode=disable is only suitable for local testing; use verify-full in production.
    connection_url = "postgresql://{{username}}:{{password}}@db:5432/customer?sslmode=disable"
    allowed_roles  = ["support-readonly", "analysis-readonly"] # Roles that may use this connection
    username       = "vault"                                   # Admin user Vault connects as
    password       = var.db_admin_password                     # Vault admin password (managed securely)
  }
}

# Define a role that specifies how to create credentials for a specific use case
resource "vault_database_secret_backend_role" "support_readonly" {
  backend = vault_database_secrets_mount.postgres.path
  name    = "support-readonly"                                    # Role name (used in policies and the creds path)
  db_name = vault_database_secrets_mount.postgres.postgresql[0].name # The connection configured above

  # SQL statements Vault executes to create each short-lived user and grant permissions
  creation_statements = [
    "CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';",
    "GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";",
    "ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT SELECT ON TABLES TO \"{{name}}\";",
    "GRANT USAGE ON SCHEMA public TO \"{{name}}\";",
  ]

  default_ttl = 300 # Credentials are valid for 5 minutes by default
  max_ttl     = 600 # ...and can be renewed up to 10 minutes
}
```
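The creation statements are templates. As a rough mental model (not Vault's code), each read of `database/creds/support-readonly` produces a fresh username, a random password, and an expiration `default_ttl` seconds out, then substitutes them into the SQL. The Python sketch below shows that substitution. The `v-<role>-<hex>` username format is hypothetical; Vault generates names using its own scheme.

```python
import secrets
import string
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple


def render_creation_statements(
    statements: List[str],
    role_name: str,
    ttl_seconds: int,
    now: Optional[datetime] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Fill {{name}}, {{password}} and {{expiration}} the way a dynamic role conceptually does."""
    if now is None:
        now = datetime.now(timezone.utc)
    alphabet = string.ascii_letters + string.digits
    values = {
        # Hypothetical username format; Vault uses its own naming scheme
        "name": f"v-{role_name}-{secrets.token_hex(4)}",
        # Cryptographically random password
        "password": "".join(secrets.choice(alphabet) for _ in range(24)),
        # Timestamp with a UTC offset, which PostgreSQL accepts for VALID UNTIL
        "expiration": (now + timedelta(seconds=ttl_seconds)).strftime("%Y-%m-%d %H:%M:%S%z"),
    }
    rendered = []
    for statement in statements:
        for key, value in values.items():
            statement = statement.replace("{{" + key + "}}", value)
        rendered.append(statement)
    return rendered, values


if __name__ == "__main__":
    sql, creds = render_creation_statements(
        ["CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';"],
        role_name="support-readonly",
        ttl_seconds=300,
    )
    print(sql[0])
```

The `VALID UNTIL` clause is a database-side backstop: even if revocation were delayed, PostgreSQL itself stops accepting the password after the lease's expiration.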

#### Agent Access Flow:

1. The agent obtains a JWT from Auth0 (or has a SPIFFE identity).

1. The agent (or more commonly, a **Vault Agent sidecar/injector**) presents the JWT to Vault's `/auth/jwt/login` endpoint (or uses its SPIFFE ID for mTLS auth).

1. Vault validates the JWT (or SPIFFE ID), matches its `sub` claim to a Vault identity entity via an alias, and issues a short-lived Vault token carrying the role's token policies (`agent-base`). Because the token is tied to that entity, templated policy paths resolve against this specific agent's name and metadata.

1. The agent (or Vault Agent) uses this Vault token to read static secrets (e.g., `secret/data/mcp/agents/common/api-keys`) or request dynamic credentials (e.g., `database/creds/support-readonly`).

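1. Dynamic credentials are leased: when a lease expires (or is revoked), Vault revokes the credentials, so the client (typically Vault Agent) must request fresh ones before that point. Static KV secrets carry no lease and change only when a new version is written.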
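Step 5 is easiest to reason about as arithmetic on the lease. The Python sketch below assumes the response shape of a `database/creds/...` read (top-level `lease_id`, `lease_duration`, and `renewable`, plus `data`). It also assumes the role's `max_ttl` is known from configuration, since it is not part of the response. The 20% refresh margin is an arbitrary choice for illustration.

```python
import time
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class Lease:
    lease_id: str
    issued_at: float     # When the credentials were issued (epoch seconds)
    lease_duration: int  # Seconds, from the Vault response
    max_ttl: int         # Seconds, from the role config (not in the response)
    renewable: bool

    @property
    def expires_at(self) -> float:
        return self.issued_at + self.lease_duration

    @property
    def hard_limit(self) -> float:
        # No renewal can push the lease past max_ttl from issuance
        return self.issued_at + self.max_ttl

    def refresh_due(self, now: float, margin: float = 0.2) -> bool:
        """True once less than `margin` of the lease duration remains."""
        return now >= self.expires_at - margin * self.lease_duration

    def renewed_expiry(self, now: float, increment: int) -> float:
        """Expiry after renewing for `increment` seconds, capped at max_ttl."""
        return min(now + increment, self.hard_limit)


def lease_from_response(body: Dict[str, Any], max_ttl: int, now: Optional[float] = None) -> Lease:
    """Build a Lease from a Vault dynamic-secret response body."""
    return Lease(
        lease_id=body["lease_id"],
        issued_at=time.time() if now is None else now,
        lease_duration=int(body["lease_duration"]),
        max_ttl=max_ttl,
        renewable=bool(body.get("renewable", False)),
    )
```

With the `support-readonly` role above (300 s default, 600 s max), credentials issued at t=1000 expire at 1300, and no renewal can extend them past 1600. After that, the client has to request a new set.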

```python

# Example Python snippets showing raw Vault API interaction.
# NOTE: Illustration only. In practice the Vault Agent sidecar handles token fetching
# and secret injection, making secrets available as files or env vars, so the
# application code usually does NOT call the Vault API directly.
import requests

VAULT_ADDR = "http://vault:8200"


def fetch_vault_token(jwt_token: str, vault_addr: str = VAULT_ADDR) -> str:
    """Exchange a JWT for a Vault token (usually done by Vault Agent)."""
    resp = requests.post(
        f"{vault_addr}/v1/auth/jwt/login",
        json={"jwt": jwt_token, "role": "agent"},  # Log in with the 'agent' role
        timeout=10,
    )
    resp.raise_for_status()
    # The returned client_token carries the policies bound to this agent's identity
    return resp.json()["auth"]["client_token"]


def get_db_credentials(vault_token: str, vault_addr: str = VAULT_ADDR) -> dict:
    """Fetch dynamic DB credentials using a Vault token (usually done by Vault Agent)."""
    resp = requests.get(
        f"{vault_addr}/v1/database/creds/support-readonly",  # Dynamic role path
        headers={"X-Vault-Token": vault_token},
        timeout=10,
    )
    resp.raise_for_status()
    # username/password valid for a short lease (lease_id/lease_duration are top-level fields)
    return resp.json()["data"]


def get_static_secret(vault_token: str, path: str, vault_addr: str = VAULT_ADDR) -> dict:
    """Fetch a static secret from the KV v2 engine at secret/ (usually done by Vault Agent)."""
    resp = requests.get(
        f"{vault_addr}/v1/secret/data/{path}",  # KV v2 reads go through the data/ prefix
        headers={"X-Vault-Token": vault_token},
        timeout=10,
    )
    resp.raise_for_status()
    # KV v2 nests the secret under data.data (data.metadata holds version info)
    return resp.json()["data"]["data"]

# In a real deployment, fetching and managing Vault tokens and secrets is best
# handled by Vault Agent (as a sidecar, or via the Vault Agent Injector for
# Kubernetes), which makes secrets available to the application container as
# files or env vars, automatically and securely.
```

#### Secret Injection Sidecar (Vault Agent)

Vault Agent is a lightweight process that can run alongside your application (as a sidecar container in Kubernetes). It's configured to:

1. Authenticate to Vault using various methods (like the JWT from your agent's service account or a projected volume).

1. Obtain and automatically renew Vault tokens.

1. Fetch secrets (static or dynamic) using the acquired token.

1. Render secrets into files using Consul Template syntax, or (in process-supervisor mode) expose them as environment variables to the application process.

1. Re-fetch and re-render secrets before their leases expire, and optionally signal the application to reload.

This is usually the safest and most developer-friendly option, because the agent application never has to fetch tokens or secrets from Vault itself.

```hcl

# Example Vault Agent configuration (config.hcl) placed in the agent's container
# pid_file = "/home/vault/pidfile" # Optional: path for a PID file
# exit_after_auth = false          # Default: keep running to manage renewals and templates

# Define how Vault Agent authenticates to Vault
auto_auth {
  method "jwt" {
    # mount_path = "auth/jwt" # Defaults to auth/<type> if not set

    config = {
      role = "agent" # The Vault role defined for agents

      # File containing the JWT. It must satisfy the role's bound_issuer and
      # bound_audiences, e.g. an Auth0 token written here by another process.
      path = "/path/to/auth0-jwt.token"

      # A Kubernetes service account token
      # (/var/run/secrets/kubernetes.io/serviceaccount/token) would not pass the
      # Auth0-bound role above; it needs its own JWT mount (or the kubernetes
      # auth method) configured to trust the cluster's issuer.
    }
  }

  # Where the obtained Vault token is written (e.g., for other tools or debugging)
  sink "file" {
    # wrap_ttl = "5m"             # Optional: response-wrap the token
    # aad_env_var = "AAD_ENV_VAR" # Optional: additional authenticated data for DH-encrypted sinks
    config = {
      path = "/home/vault/.vault-token" # Write the token to a file
      mode = 0600                       # Restrict permissions
    }
  }
}

# The cache block configures token caching behavior (optional but recommended)
cache {
  use_auto_auth_token = true # Use the token obtained by auto_auth
}

# A listener block can expose Vault Agent's local proxy (optional)
# listener "tcp" {
#   address     = "127.0.0.1:8100"
#   tls_disable = true
# }

# Template blocks define which secrets to fetch and how to render them
template {
  source      = "/vault/agent/templates/credentials.ctmpl" # Consul Template file inside the container
  destination = "/etc/app/config/agent_credentials.json"   # Where the rendered secret is written
  perms       = "0400"                                     # Read-only for the owner
  # Optional command to run after each render (e.g., signal the app to reload config)
  # command = "kill -HUP <pid>"  # or "/app/reload_config.sh"
}

template {
  source      = "/vault/agent/templates/db_creds.ctmpl"
  destination = "/etc/app/config/db_credentials.json"
  perms       = "0400"
  # command = "..." # Signal the app if needed
}

# --- Example Consul Template syntax for the source template files ---

# /vault/agent/templates/credentials.ctmpl
# Reads a static secret from the KV v2 engine mounted at secret/, using the
# agent-specific path that the agent-base policy grants to this entity
{{ with secret "secret/data/mcp/agents/support-triage-agent/credentials" }}
{
  "api_key": "{{ .Data.data.api_key }}",
  "environment": "{{ .Data.data.environment }}"
}
{{ end }}

# /vault/agent/templates/db_creds.ctmpl
# Fetches dynamic database credentials. The rendered file must be valid JSON,
# so inline notes use Go template comments, which render to nothing.
{{ with secret "database/creds/support-readonly" }}
{
  "db": {
    "host": "customer-db.internal", {{/* Internal DNS name */}}
    "port": 5432,
    "username": "{{ .Data.username }}",
    "password": "{{ .Data.password }}",
    "database": "customer",
    "lease_duration": "{{ .LeaseDuration }}" {{/* Lease length in seconds, if useful */}}
  }
}
{{ end }}
```

This approach ensures:

- Credentials are not hardcoded or passed directly to the application process.

- Rotation happens automatically before expiry, managed by Vault Agent.

- The application only needs to read the rendered files (or environment variables, if using Vault Agent's `env_template` in process-supervisor mode). Rotation needs little or no application change as long as the app can reload its configuration dynamically or when signaled.
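On the application side, "reading from the rendered files" can be as small as reloading the JSON whenever the file changes. The Python sketch below assumes the `db_credentials.json` layout from the template above. It polls the file's modification time instead of relying on a reload signal, and keeps the last good copy if it ever reads a partially written file. The class name is hypothetical.

```python
import json
import os
import threading
from typing import Any, Dict, Optional


class RenderedCredentials:
    """Serves the contents of a JSON file rendered by Vault Agent, reloading on change."""

    def __init__(self, path: str):
        self._path = path
        self._lock = threading.Lock()
        self._mtime_ns: Optional[int] = None
        self._data: Dict[str, Any] = {}

    def get(self) -> Dict[str, Any]:
        mtime_ns = os.stat(self._path).st_mtime_ns
        with self._lock:
            if mtime_ns != self._mtime_ns:
                try:
                    with open(self._path, encoding="utf-8") as fh:
                        self._data = json.load(fh)
                    self._mtime_ns = mtime_ns
                except json.JSONDecodeError:
                    if self._mtime_ns is None:
                        raise  # Nothing good to fall back on yet
                    # Otherwise keep the previous credentials and retry on the next call
            return self._data
```

Usage would look like `creds = RenderedCredentials("/etc/app/config/db_credentials.json")`, then `creds.get()["db"]["username"]` at connection time. Opening new database connections with the latest values is enough, because Vault revokes the old user when its lease ends.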

## 4. Agent SDK (Python)

To make agent development easy, provide an SDK that hides token management (when it isn't handled externally), routes every call through the sidecar, and can expose other control plane features.
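The SDK's `token_provider` hook pairs naturally with a sidecar that writes a bearer token to a file. The Python sketch below assumes a plain-text token file. The helper name and the path in the usage line are hypothetical, and the helper is not part of the SDK itself.

```python
import os
import threading
from typing import Callable


def file_token_provider(path: str) -> Callable[[], str]:
    """Return a callable that reads a bearer token from `path`, re-reading only when it changes."""
    lock = threading.Lock()
    state = {"mtime_ns": None, "token": ""}

    def provider() -> str:
        mtime_ns = os.stat(path).st_mtime_ns
        with lock:
            if mtime_ns != state["mtime_ns"]:
                with open(path, encoding="utf-8") as fh:
                    state["token"] = fh.read().strip()  # Drop trailing newlines
                state["mtime_ns"] = mtime_ns
            return state["token"]

    return provider
```

Passing it as `MCPAgent(token_provider=file_token_provider("/var/run/mcp/token"))` disables the SDK's internal Auth0 flow, so whatever writes the file owns refresh and rotation.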

```python

# mcp_agent.py
import json
import logging
import os
import random  # Jitter for retry backoff
import re  # Workflow template parsing
import threading
import time
from datetime import datetime  # Token-expiry logging (and the hello-skill example)
from typing import Any, Callable, Dict, List, Optional, Union

import requests


class MCPAgentError(Exception):
    """Base exception for MCP Agent SDK errors."""


class AuthenticationError(MCPAgentError):
    """Error during authentication or token refresh."""


class RequestError(MCPAgentError):
    """Error during an HTTP request via the sidecar."""

class MCPAgent:
    """Machine Control Plane Agent SDK.

    Provides methods to interact with skills and data sources via the local MCP sidecar proxy.
    Handles token acquisition and refresh using the Auth0 Client Credentials flow if configured;
    otherwise assumes authentication is handled externally (e.g., mTLS, Vault Agent).
    """

    def __init__(
        self,
        client_id: Optional[str] = None,
        client_secret: Optional[str] = None,
        auth0_domain: Optional[str] = None,
        audience: str = "https://mcp.example.com/",
        proxy_url: str = "http://127.0.0.1:15000",  # URL of the Envoy sidecar
        token_refresh_margin: int = 300,  # Refresh the token 5 minutes before expiry
        logger: Optional[logging.Logger] = None,
        # Allow providing a token externally (e.g., if Vault Agent writes it to a file/env var)
        token_provider: Optional[Callable[[], Optional[str]]] = None,
    ):
        """Initialize the MCP Agent SDK.

        Args:
            client_id: Auth0 Client ID (defaults to the MCP_CLIENT_ID env var). Required for the Auth0 flow.
            client_secret: Auth0 Client Secret (defaults to the MCP_CLIENT_SECRET env var). Required for the Auth0 flow.
            auth0_domain: Auth0 domain (defaults to the AUTH0_DOMAIN env var). Required for the Auth0 flow.
            audience: API audience (defaults to https://mcp.example.com/).
            proxy_url: URL of the Envoy sidecar (defaults to http://127.0.0.1:15000).
                All agent traffic goes through this local proxy.
            token_refresh_margin: Seconds before expiry to refresh the token (Auth0 flow only).
            logger: Custom logger (defaults to the "mcp_agent" standard logger).
            token_provider: Optional callable that returns the current bearer token string.
                If provided, the internal Auth0 flow is disabled.
        """
        self.client_id = client_id or os.environ.get("MCP_CLIENT_ID")
        self.client_secret = client_secret or os.environ.get("MCP_CLIENT_SECRET")
        self.auth0_domain = auth0_domain or os.environ.get("AUTH0_DOMAIN")
        self.audience = audience
        self.proxy_url = proxy_url
        self.token_refresh_margin = token_refresh_margin
        self.logger = logger or logging.getLogger("mcp_agent")
        self.token_provider = token_provider

        # Token state (only used when token_provider is NOT set)
        self._access_token: Optional[str] = None
        self._token_expiry: float = 0.0
        self._token_lock = threading.RLock()  # Protects token state; reentrant for nested refreshes
        self._refresh_thread: Optional[threading.Thread] = None
        self._running = True
        self._can_fetch_token_internally = False

        if self.token_provider:
            self.logger.info("Using external token provider. Internal Auth0 token fetching disabled.")
        elif all([self.client_id, self.client_secret, self.auth0_domain]):
            self.logger.info("Using internal Auth0 Client Credentials flow for token management.")
            self._can_fetch_token_internally = True
            try:
                self._fetch_token()  # Initial token fetch
            except AuthenticationError as e:
                # Treat an initial fetch failure as fatal when the SDK manages tokens
                self.logger.error(f"Initial token fetch failed: {e}")
                raise
            # Background refresh; daemon=True so the thread exits with the main program
            self._refresh_thread = threading.Thread(target=self._token_refresh_loop, daemon=True)
            self._refresh_thread.start()
        else:
            self.logger.warning(
                "No external token provider and missing Auth0 credentials. "
                "SDK will not add Authorization headers. Ensure auth is handled by the sidecar (e.g., mTLS)."
            )

    def _fetch_token(self) -> None:
        """Fetch a new access token from Auth0 (Client Credentials grant)."""
        if not self._can_fetch_token_internally:
            # Should not be called when using an external provider or without credentials
            self.logger.error("Internal error: _fetch_token called unexpectedly.")
            return
        self.logger.debug("Fetching new token from Auth0...")
        try:
            response = requests.post(
                f"https://{self.auth0_domain}/oauth/token",
                json={
                    "grant_type": "client_credentials",
                    "client_id": self.client_id,
                    "client_secret": self.client_secret,
                    "audience": self.audience,
                },
                timeout=10,
            )
            response.raise_for_status()  # HTTPError for 4xx/5xx responses
            token_data = response.json()
            with self._token_lock:
                self._access_token = token_data["access_token"]
                # 'expires_in' is the number of seconds until the token expires
                self._token_expiry = time.time() + token_data["expires_in"]
            self.logger.info(
                "New token acquired via internal fetch, expires at: "
                f"{datetime.fromtimestamp(self._token_expiry).isoformat()}"
            )
        # Parse errors first: requests' own JSONDecodeError also subclasses RequestException
        except (KeyError, json.JSONDecodeError) as e:
            self.logger.error(f"Failed to parse token response from Auth0: {e}")
            raise AuthenticationError(f"Invalid token response from Auth0: {e}") from e
        except requests.exceptions.RequestException as e:
            self.logger.error(f"Failed to fetch token from Auth0: {e}")
            raise AuthenticationError(f"Auth0 token request failed: {e}") from e

    def _token_refresh_loop(self) -> None:
        """Background thread that refreshes the token before it expires (internal Auth0 flow only)."""
        while self._running:
            sleep_duration = 60.0  # Default check interval
            try:
                with self._token_lock:
                    has_token = self._access_token is not None
                    time_to_expiry = self._token_expiry - time.time()
                # Refresh if there is no token yet, the margin has been reached, or it already expired
                if not has_token or time_to_expiry < self.token_refresh_margin:
                    self.logger.info(f"Token expiring soon ({time_to_expiry:.0f}s remaining), refreshing...")
                    self._fetch_token()
                    with self._token_lock:
                        time_to_expiry = self._token_expiry - time.time()
                # Sleep until shortly before the refresh margin is hit, clamped to [10s, 15 min]
                sleep_duration = min(max(10.0, time_to_expiry - self.token_refresh_margin - 10), 900.0)
            except AuthenticationError as e:
                # Back off and retry later; keep using the old token, which may still be valid briefly
                self.logger.error(f"Token refresh failed: {e}. Retrying in 60s.")
                sleep_duration = 60.0
            except Exception as e:
                # Catch unexpected errors in the refresh loop itself
                self.logger.error(f"Unexpected error in token refresh loop: {e}", exc_info=True)
                sleep_duration = 60.0
            self.logger.debug(f"Next token refresh check in {sleep_duration:.0f} seconds.")
            time.sleep(sleep_duration)

    def get_token(self) -> Optional[str]:
        """Get the current valid access token, or None if no token management is configured."""
        if self.token_provider:
            try:
                token = self.token_provider()
            except Exception as e:
                self.logger.error(f"External token provider failed: {e}")
                raise AuthenticationError("Failed to get token from external provider") from e
            if not token:
                self.logger.warning("External token provider returned an empty token.")
            return token

        if self._can_fetch_token_internally:
            with self._token_lock:
                # Refresh synchronously if the token is (nearly) expired and the
                # background thread hasn't caught up yet
                if time.time() > self._token_expiry - 10:  # Small buffer
                    if self._running:  # Avoid refreshing while shutting down
                        self.logger.warning("Token requested close to or after expiry; attempting synchronous refresh.")
                        try:
                            self._fetch_token()
                        except AuthenticationError as e:
                            # Raising is safer than returning a possibly expired token
                            self.logger.error(f"Synchronous token refresh failed: {e}")
                            raise
                    else:
                        self.logger.warning("SDK closing; returning a potentially expired token.")
                # Return the current (possibly just refreshed) token
                return self._access_token

        # No token provider and no internal fetching configured
        self.logger.debug("No token management configured; returning None.")
        return None

    def _make_request(self, method: str, url: str, **kwargs) -> requests.Response:
        """Helper to make HTTP requests via the sidecar proxy."""
        # Copy so we never mutate a headers dict passed in by the caller
        headers = dict(kwargs.pop("headers", None) or {})
        token = self.get_token()  # Current token (refreshes if needed, handles all providers)
        if token:
            # Don't override an explicitly provided Authorization header
            if "Authorization" not in headers:
                headers["Authorization"] = f"Bearer {token}"
        elif "Authorization" not in headers:
            # Proceed without an Authorization header if no token management is active
            self.logger.debug("No token available and Authorization header not provided.")

        # Requests always go to the local sidecar proxy, with the mcp:// path
        full_url = f"{self.proxy_url}{url}"

        # Basic retry logic for transient network issues or specific status codes
        retry_count = kwargs.pop("retry_count", 2)
        timeout = kwargs.pop("timeout", 30)
        retry_statuses = {502, 503, 504}  # Retry on these gateway/timeout errors
        needs_auth_retry = False
        response = None

        for attempt in range(retry_count + 1):
            try:
                self.logger.debug(f"Request attempt {attempt + 1}: {method} {full_url}")
                response = requests.request(
                    method,
                    full_url,
                    headers=headers,
                    timeout=timeout,
                    **kwargs,  # Remaining kwargs (json, params, etc.)
                )
                if response.status_code == 401 and self._can_fetch_token_internally and attempt < retry_count:
                    # Possibly an expired token: refresh it below, then retry
                    self.logger.warning("Received 401 Unauthorized. Attempting token refresh and retry.")
                    needs_auth_retry = True
                elif response.status_code in retry_statuses and attempt < retry_count:
                    self.logger.warning(f"Received {response.status_code}. Retrying...")
                else:
                    # Success or non-retryable error: raise HTTPError for 4xx/5xx
                    response.raise_for_status()
                    return response
            except requests.exceptions.HTTPError:
                # HTTPError subclasses RequestException; re-raise it unchanged so
                # callers (e.g., call_skill) can inspect the status code and body
                raise
            except requests.exceptions.Timeout as e:
                if attempt >= retry_count:
                    self.logger.error(f"Request timed out after {retry_count} retries ({method} {full_url}): {e}")
                    raise RequestError(f"Request timed out after retries: {e}") from e
                self.logger.warning(f"Request timed out ({method} {full_url}), retrying ({attempt + 1}/{retry_count}): {e}")
            except requests.exceptions.ConnectionError as e:
                if attempt >= retry_count:
                    self.logger.error(f"Connection error after {retry_count} retries ({method} {full_url}): {e}")
                    raise RequestError(f"Connection error after retries: {e}") from e
                self.logger.warning(f"Connection error ({method} {full_url}), retrying ({attempt + 1}/{retry_count}): {e}")
            except requests.exceptions.RequestException as e:
                # Other request exceptions are treated as non-retryable
                self.logger.error(f"Request failed ({method} {full_url}): {e}")
                raise RequestError(f"Request failed: {e}") from e

            # --- Retry logic (only reached when another attempt remains) ---
            if needs_auth_retry:
                try:
                    self._fetch_token()  # Synchronous token refresh
                    headers["Authorization"] = f"Bearer {self._access_token}"
                    needs_auth_retry = False
                    self.logger.info("Token refreshed successfully after 401.")
                except AuthenticationError as auth_e:
                    self.logger.error(f"Token refresh failed during 401 retry attempt: {auth_e}")
                    # Without a new token the retry can't succeed: raise the original 401
                    response.raise_for_status()

            # Exponential backoff with jitter
            backoff_time = (2 ** attempt) + random.uniform(0, 1)
            self.logger.info(f"Waiting {backoff_time:.2f}s before attempt {attempt + 2}...")
            time.sleep(backoff_time)

        # Unreachable in practice: the final attempt either returns or raises
        raise RequestError("Request failed after exhausting retries.")

    def call_skill(
        self,
        skill_name: str,
        path: str,  # Path on the skill's API (e.g., /analyze)
        payload: Optional[Dict[str, Any]] = None,
        method: str = "POST",
        params: Optional[Dict[str, str]] = None,
        timeout: int = 30,
        retry_count: int = 2,
        **kwargs,  # Other options passed through to requests
    ) -> Any:  # Return type depends on the response content type
        """Call a skill or service through the MCP sidecar.

        Constructs the mcp:// URL and handles auth/retries via the sidecar.

        Args:
            skill_name: The logical name of the target skill/service.
            path: The API path on the target service (e.g., '/analyze'). Should start with '/'.
            payload: JSON-serializable body for POST/PUT/PATCH requests.
            method: HTTP method (POST, GET, PUT, DELETE, etc.). Defaults to POST.
            params: Query string parameters for GET requests.
            timeout: Request timeout in seconds.
            retry_count: Number of retries on transient errors.
            **kwargs: Additional keyword arguments passed to requests.request().

        Returns:
            Parsed JSON if the Content-Type is application/json, otherwise the raw response text.

        Raises:
            RequestError: If the request fails after retries.
            AuthenticationError: If token acquisition fails.
        """
        if not path.startswith("/"):
            path = "/" + path  # Ensure a leading slash for consistency
        method = method.upper()

        # Construct the mcp:// URL that the sidecar routes on
        mcp_url = f"/mcp://{skill_name}{path}"
        self.logger.debug(f"Calling {method} {mcp_url}")

        try:
            response = self._make_request(
                method,
                mcp_url,
                json=payload if method in ("POST", "PUT", "PATCH") else None,
                params=params if method == "GET" else None,
                timeout=timeout,
                retry_count=retry_count,
                **kwargs,
            )
        except requests.exceptions.HTTPError as e:
            # HTTP errors raised by _make_request via response.raise_for_status()
            self.logger.error(f"{method} {mcp_url} failed with status {e.response.status_code}: {e.response.text}")
            raise RequestError(f"Request failed: {e.response.status_code} - {e.response.text}") from e
        # Timeouts and connection errors arrive from _make_request as RequestError;
        # AuthenticationError propagates unchanged

        # Parse JSON if the response says it is JSON; otherwise return raw text
        content_type = response.headers.get("content-type", "").lower()
        if "application/json" in content_type:
            try:
                return response.json()
            except ValueError:  # Covers json.JSONDecodeError and requests' JSONDecodeError
                self.logger.warning(f"Failed to decode JSON response from {mcp_url}; returning raw text.")
        return response.text

    # --- Convenience Methods ---

    def get_data(
        self,
        data_source: str,
        path: str,  # Path on the data source's API (e.g., /customers/123)
        params: Optional[Dict[str, str]] = None,
        timeout: int = 30,
        retry_count: int = 2,
        **kwargs,
    ) -> Any:
        """Read data from a registered data source (convenience wrapper for GET requests)."""
        return self.call_skill(
            skill_name=data_source,
            path=path,
            method="GET",
            params=params,
            timeout=timeout,
            retry_count=retry_count,
            **kwargs,
        )

    def write_data(
        self,
        data_sink: str,
        path: str,  # Path on the data sink's API (e.g., /tickets/456/status)
        payload: Dict[str, Any],  # Data to write (JSON body)
        method: str = "PUT",  # Defaults to PUT; pass POST/PATCH via method
        timeout: int = 30,
        retry_count: int = 2,
        **kwargs,
    ) -> Any:
        """Write data to a registered data sink (convenience wrapper for PUT/POST requests)."""
        return self.call_skill(
            skill_name=data_sink,
            path=path,
            method=method,
            payload=payload,
            timeout=timeout,
            retry_count=retry_count,
            **kwargs,
        )

    # --- Declarative Workflow Execution (Conceptual Sketch within SDK) ---
    # NOTE: This remains a *very basic* sketch of how a workflow engine *could* use the SDK.
    # A real engine would be a separate, more complex component.

    def run_workflow_sketch(
        self,
        workflow_definition: Dict[str, Any],  # Parsed YAML/JSON workflow
        initial_context: Optional[Dict[str, Any]] = None,
    ) -> Dict[str, Any]:
        """Execute a simple, sequential workflow defined declaratively (basic sketch).

        Returns outputs keyed by step name, so later steps can reference
        $outputs.<step_name>.<key>.
        """
        context = initial_context or {}
        outputs: Dict[str, Dict[str, Any]] = {}  # outputs[step_name][output_key]
        self.logger.info(f"Starting workflow sketch: {workflow_definition.get('name', 'Unnamed Workflow')}")

        for i, step in enumerate(workflow_definition.get("steps", [])):
            step_name = step.get("name", f"step-{i + 1}")
            skill_name = step.get("skill")
            path = step.get("path")
            method = step.get("method", "POST").upper()
            if not skill_name or not path:
                # A real engine would raise a validation error or record a failure state
                self.logger.error(f"Workflow step '{step_name}': missing skill or path. Skipping.")
                continue
            self.logger.info(f"Workflow step {i + 1}: '{step_name}' -> {method} {skill_name}{path}")

            # --- Input/path templating (VERY basic; a real engine needs a proper one) ---
            step_input_payload: Dict[str, Any] = {}
            step_params: Dict[str, Any] = {}
            try:
                # Resolve path parameters first (e.g., /tickets/$inputs.ticket_id)
                processed_path = self._resolve_workflow_template(path, context, outputs, step_name)
                # Resolve the input payload / query params
                resolved_inputs = {
                    key: self._resolve_workflow_template(template_value, context, outputs, step_name)
                    for key, template_value in step.get("input", {}).items()
                }
                if method == "GET":
                    step_params = resolved_inputs
                else:
                    step_input_payload = resolved_inputs
            except Exception as e:
                self.logger.error(f"Workflow step '{step_name}': failed to resolve inputs/path: {e}")
                raise MCPAgentError(f"Workflow input/path resolution failed for step '{step_name}'") from e

            # --- Execute the step using the SDK ---
            try:
                result = self.call_skill(
                    skill_name=skill_name,
                    path=processed_path,
                    method=method,
                    payload=step_input_payload if method not in ("GET", "DELETE") else None,
                    params=step_params if method == "GET" else None,
                )
            except Exception as e:
                # A real engine would implement retries, error handling, and compensation
                self.logger.error(f"Workflow step '{step_name}': execution failed: {e}")
                raise
            self.logger.info(f"Workflow step '{step_name}': completed successfully.")

            # Store outputs under the step name, per the step's 'output' spec
            step_outputs = outputs.setdefault(step_name, {})
            for output_key, output_path_template in step.get("output", {}).items():
                if output_path_template == "$response":
                    step_outputs[output_key] = result
                elif isinstance(output_path_template, str) and output_path_template.startswith("$response."):
                    # Basic support for selecting sub-fields, e.g., $response.data.score
                    try:
                        value: Any = result
                        for part in output_path_template.split(".")[1:]:  # Skip '$response'
                            if isinstance(value, dict):
                                value = value.get(part)
                            elif isinstance(value, list) and part.isdigit():
                                idx = int(part)
                                value = value[idx] if idx < len(value) else None
                            else:
                                value = None
                            if value is None:
                                break
                        step_outputs[output_key] = value
                    except Exception as e:
                        self.logger.warning(
                            f"Workflow step '{step_name}': failed to map output path '{output_path_template}': {e}"
                        )
                        step_outputs[output_key] = None
                else:
                    self.logger.warning(
                        f"Workflow step '{step_name}': unsupported output mapping '{output_path_template}'. "
                        "Storing raw response."
                    )
                    step_outputs[output_key] = result  # Fall back to the raw response

        self.logger.info("Workflow sketch finished.")
        return outputs  # Accumulated outputs from all steps, keyed by step name

    def _resolve_workflow_template(self, template: Any, context: Dict, outputs: Dict, step_name: str) -> Any:
        """Extremely basic template resolver for the workflow sketch."""
        if not isinstance(template, str):
            return template  # Not a string: return as-is

        def lookup(source: Dict):
            # Build a re.sub callback that resolves a dotted path against `source`.
            # Substituting match-by-match avoids clobbering references that share a prefix.
            def replace(match):
                value = self._get_nested_value(source, match.group(1).split("."))
                return str(value) if value is not None else ""
            return replace

        # Replace $inputs.key[.subkey...]
        resolved_value = re.sub(r"\$inputs\.([A-Za-z0-9_.]+)", lookup(context), template)
        # Replace $outputs.step_name.key[.subkey...]; hyphens are allowed because
        # default step names look like "step-1"
        resolved_value = re.sub(r"\$outputs\.([A-Za-z0-9_.-]+)", lookup(outputs), resolved_value)

        # Handle a basic `primary || default` syntax
        if "||" in resolved_value:
            primary, default = (part.strip() for part in resolved_value.split("||", 1))
            default = default.strip('"').strip("'")  # Basic string default
            resolved_value = default if primary in ("", "None") else primary

        # Parse literal JSON/numbers only when no substitution happened
        if resolved_value == template:
            try:
                return json.loads(resolved_value)
            except (json.JSONDecodeError, TypeError):
                pass  # Not valid JSON
            try:
                return int(resolved_value)
            except (ValueError, TypeError):
                pass
            try:
                return float(resolved_value)
            except (ValueError, TypeError):
                pass
        return resolved_value  # String result when substitutions occurred or parsing failed

    def _get_nested_value(self, source: Any, path_parts: List[str]) -> Any:
        """Get a value from nested dicts/lists by following a list of keys or indices."""
        value = source
        try:
            for part in path_parts:
                if isinstance(value, dict):
                    value = value.get(part)
                elif isinstance(value, list) and part.isdigit():
                    idx = int(part)
                    value = value[idx] if 0 <= idx < len(value) else None
                else:
                    return None  # Path doesn't exist or type mismatch
                if value is None:
                    return None
            return value
        except Exception:
            return None

    def close(self) -> None:
        """Clean up resources (e.g., stop background threads)."""
        self.logger.info("Closing MCPAgent SDK...")
        self._running = False
        if self._refresh_thread and self._refresh_thread.is_alive():
            # Give the refresh thread a moment to finish its current sleep/work cycle
            self.logger.debug("Waiting for token refresh thread to exit...")
            self._refresh_thread.join(timeout=2.0)
            if self._refresh_thread.is_alive():
                self.logger.warning("Token refresh thread did not terminate gracefully.")
        self.logger.info("MCPAgent SDK closed.")

    # Context manager support for 'with MCPAgent() as agent:'
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.close()
        # Returning False propagates exceptions from the 'with' block
        return False

```

### Example Usage:
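The script that follows passes `MCPAgent` a `token_provider` callable (`vault_token_provider`) that re-reads the Vault Agent token file each time it is called. If that per-call read matters, a caching provider is one option. The sketch below assumes, as `vault_token_provider` suggests, that the SDK calls the provider with no arguments and accepts either a token string or `None`; `CachedFileTokenProvider` is a hypothetical name, not part of the SDK. It stats the file on every call and re-reads it only when the modification time changes, since any rewrite of the file (for example, Vault Agent re-rendering it after a renewal) updates that timestamp.

```python
import os
from typing import Optional


class CachedFileTokenProvider:
    """Hypothetical drop-in for vault_token_provider that caches the token.

    Assumes the SDK invokes token_provider() with no arguments and accepts
    a token string or None, mirroring vault_token_provider.
    """

    def __init__(self, token_path: str = "/vault/secrets/mcp-token") -> None:
        self.token_path = token_path
        self._token: Optional[str] = None
        self._mtime_ns: Optional[int] = None

    def __call__(self) -> Optional[str]:
        try:
            mtime_ns = os.stat(self.token_path).st_mtime_ns
            if mtime_ns != self._mtime_ns:
                # File is new or was rewritten since the last read: load it again
                with open(self.token_path, "r") as f:
                    self._token = f.read().strip() or None  # Empty file -> None
                self._mtime_ns = mtime_ns
        except FileNotFoundError:
            # Token not rendered yet (or removed): forget any stale value
            self._token, self._mtime_ns = None, None
        return self._token
```

Passing `token_provider=CachedFileTokenProvider()` in place of `vault_token_provider` would leave the rest of `process_ticket` unchanged, provided the SDK contract matches the assumption above. The trade-off is one `stat` call per invocation instead of an open and read. On filesystems with coarse timestamps, two rewrites within the same tick could go unnoticed.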

```python

# support_agent.py
import logging
import os

from mcp_agent import MCPAgent, RequestError, AuthenticationError

# Set up logging
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(name)s - %(levelname)s - %(message)s')
logger = logging.getLogger("support-agent")


# Example: Vault Agent writes the token to a file
def vault_token_provider(token_path="/vault/secrets/mcp-token"):
    """Read the token from a file managed by Vault Agent; return None if unavailable."""
    try:
        with open(token_path, 'r') as f:
            return f.read().strip()
    except FileNotFoundError:
        logger.error(f"Vault token file not found at {token_path}")
        return None
    except Exception as e:
        logger.error(f"Error reading Vault token file: {e}")
        return None

def process_ticket(ticket_id, customer_id):
    """Process a support ticket using the agent infrastructure."""
    # Initialize the agent SDK.
    # Option 1: Use the internal Auth0 flow (requires env vars to be set)
    # agent_provider = None
    # Option 2: Use an external token provider (e.g., Vault Agent)
    agent_provider = vault_token_provider

    try:
        # Using 'with' ensures agent.close() is called for cleanup
        with MCPAgent(token_provider=agent_provider) as agent:
            logger.info("MCPAgent initialized.")

            # Step 1: Look up customer information using a 'skill'
            logger.info(f"Looking up customer {customer_id}...")
            customer = agent.call_skill(
                "customer-lookup",                     # Target service name
                "/customer",                           # Path on that service
                payload={"customer_id": customer_id},  # Body for POST
                method="POST",
            )
            logger.info(f"Retrieved customer: {customer.get('name', 'N/A') if isinstance(customer, dict) else customer}")

            # Step 2: Get ticket content from a 'data source' (using the GET helper)
            logger.info(f"Getting ticket {ticket_id}...")
            ticket = agent.get_data(
                "support-system",
                f"/tickets/{ticket_id}",  # Path with ticket ID
                # params={"expand": "details"}  # Optional query params
            )
            logger.info(f"Retrieved ticket: {ticket.get('subject', 'N/A') if isinstance(ticket, dict) else ticket}")

            # Step 3: Analyze sentiment using another 'skill'
            logger.info("Analyzing sentiment...")
            sentiment = agent.call_skill(
                "sentiment-analysis",
                "/analyze",
                payload={"text": ticket.get("description", "") if isinstance(ticket, dict) else ""},
            )
            logger.info(f"Sentiment score: {sentiment.get('score', 'N/A') if isinstance(sentiment, dict) else sentiment}")

            # Step 4: Generate a response
            logger.info("Generating response...")
            response_payload = {
                "ticket": ticket,
                "customer": customer,
                "sentiment": sentiment,
                "language": customer.get("preferred_language", "en") if isinstance(customer, dict) else "en",
            }
            response = agent.call_skill("response-generator", "/generate", payload=response_payload)
            logger.info(f"Generated response (partial): {str(response)[:100]}...")

            # Step 5: Write the response back (using the PUT helper)
            logger.info(f"Writing response to ticket {ticket_id}...")
            update_payload = {"response": response.get("text", "") if isinstance(response, dict) else str(response)}
            write_result = agent.write_data(
                "support-system",
                f"/tickets/{ticket_id}/responses",  # Path for writing the response
                payload=update_payload,
                method="PUT",  # Or POST, depending on the API design
            )
            logger.info(f"Wrote response to ticket {ticket_id}. Result: {write_result}")

            # Return the key results of the process
            return {
                "ticket_id": ticket_id,
                "customer_id": customer_id,
                "sentiment_score": sentiment.get("score") if isinstance(sentiment, dict) else None,
                "response_snippet": (response.get("text", "")[:50] + "...") if isinstance(response, dict) and response.get("text") else None,
            }

    except AuthenticationError as e:
        logger.error(f"Authentication failed: {e}", exc_info=True)
        raise
    except RequestError as e:
        logger.error(f"Request failed for ticket {ticket_id}: {e}", exc_info=True)
        raise  # Re-raise the specific error
    except Exception as e:
        logger.error(f"An unexpected error occurred during ticket processing for {ticket_id}: {e}", exc_info=True)
        raise  # Re-raise unexpected exceptions

if __name__ == "__main__":
    # Example usage:
    ticket_id = "TKT-1234"
    customer_id = "CUST-5678"

    # Check whether the internal Auth0 flow is in use and warn if credentials are missing
    # (adjust this check depending on whether you expect internal or external token management)
    # if not os.getenv("VAULT_TOKEN_PATH") and not all([os.getenv("MCP_CLIENT_ID"), os.getenv("MCP_CLIENT_SECRET"), os.getenv("AUTH0_DOMAIN")]):
    #     logger.warning("No external token provider path (e.g., VAULT_TOKEN_PATH) and missing Auth0 creds.")
    #     logger.warning("Ensure authentication is handled externally or provide MCP_CLIENT_ID, MCP_CLIENT_SECRET, AUTH0_DOMAIN.")

    # For a local demo with docker-compose, set these if using the internal flow:
    # os.environ['MCP_CLIENT_ID'] = 'demo-agent'
    # os.environ['MCP_CLIENT_SECRET'] = 'demo-secret'
    # os.environ['AUTH0_DOMAIN'] = 'auth-mock:8080'

    try:
        result = process_ticket(ticket_id, customer_id)
        logger.info(f"Ticket processed successfully: {result}")
    except Exception:
        # process_ticket has already logged the details with a traceback
        logger.error("Ticket processing failed.")
        raise SystemExit(1)  # Signal failure to the caller (e.g., a job runner)

```

### Declarative Workflow Definition (Sketch):
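Steps in a declarative workflow refer to earlier data with `$inputs.<path>` and `$outputs.<step>.<path>` expressions. `run_workflow_sketch` resolves them with two small rules: walk the dotted path through nested dicts and list indices, and fall back to the text after `||` when the reference resolves to nothing. The standalone sketch below isolates those rules so they can be tried without an agent or any network calls. It is an illustrative variant, not the SDK method. The names `REF_PATTERN`, `get_nested`, and `resolve` are hypothetical, and step outputs are assumed to be stored under their step name. It also differs deliberately in two places: a template that is exactly one reference returns the referenced value with its type intact (the SDK sketch always substitutes a string), and the pattern does not absorb a trailing period.

```python
import re
from typing import Any, Dict, List

# $inputs.<path> or $outputs.<step>.<path>; dotted segments of letters, digits, '_'
REF_PATTERN = re.compile(r"\$(inputs|outputs)\.([A-Za-z0-9_]+(?:\.[A-Za-z0-9_]+)*)")


def get_nested(source: Any, parts: List[str]) -> Any:
    """Follow dict keys and list indices; return None as soon as a hop is missing."""
    value = source
    for part in parts:
        if isinstance(value, dict):
            value = value.get(part)
        elif isinstance(value, list) and part.isdigit() and int(part) < len(value):
            value = value[int(part)]
        else:
            return None
        if value is None:
            return None
    return value


def resolve(template: Any, inputs: Dict, outputs: Dict) -> Any:
    """Resolve references, falling back to the text after '||' when nothing resolves."""
    if not isinstance(template, str):
        return template  # Literals (numbers, dicts, ...) pass through unchanged
    primary, _, default = template.partition("||")
    scopes = {"inputs": inputs, "outputs": outputs}

    def lookup(match: re.Match) -> Any:
        return get_nested(scopes[match.group(1)], match.group(2).split("."))

    def as_text(match: re.Match) -> str:
        found = lookup(match)
        return "" if found is None else str(found)

    whole = REF_PATTERN.fullmatch(primary.strip())
    if whole:
        value = lookup(whole)  # Single reference: keep the value's original type
    else:
        value = REF_PATTERN.sub(as_text, primary).strip()  # Mixed text: substitute strings
    if value in (None, "") and default:
        return default.strip().strip("'\"")  # Basic quoted string default
    return value
```

Keeping the type matters when a later step forwards a numeric score or a whole response object as a payload field rather than interpolating it into a path.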

This YAML defines a simple sequence of steps. It is a basic illustration of the *concept* of declarative workflows, which would be executed by a specialized workflow engine built *on top of* the Agent SDK. The `run_workflow_sketch` method in the `MCPAgent` class shows how such an engine *might* use the SDK.

```yaml

# support_workflow.yaml

name: support-ticket-triage